AWS Lambda runs your code on a highly available and scalable compute infrastructure so that you can focus on what you want to build. This post walks through how to run Docker-based workloads on AWS Lambda.

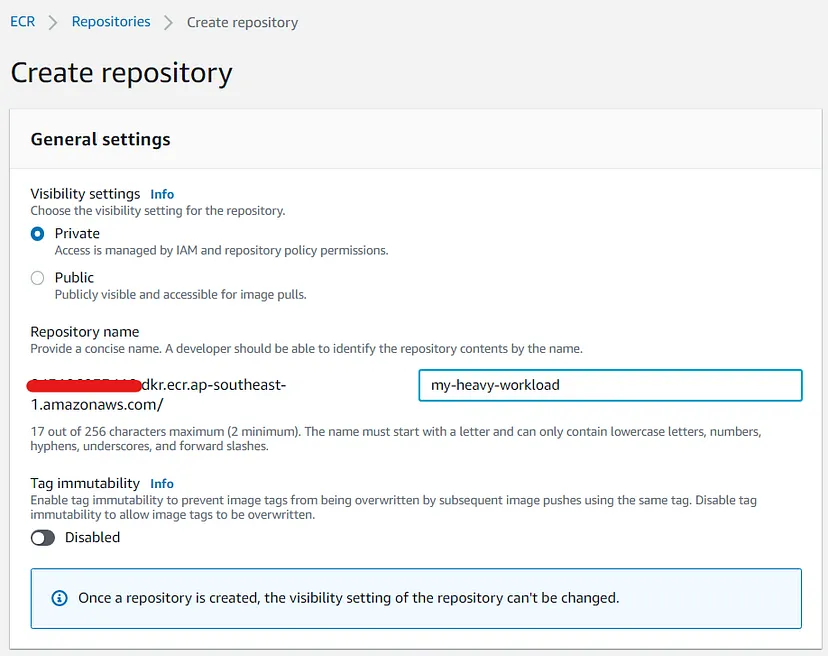

Step 1: Create an ECR Repository

Amazon ECR registries host your container images in a highly available and scalable architecture, allowing you to deploy containers reliably for your applications.

Using the AWS console, navigate to Amazon ECR and create a repository. It will hold your Docker image.

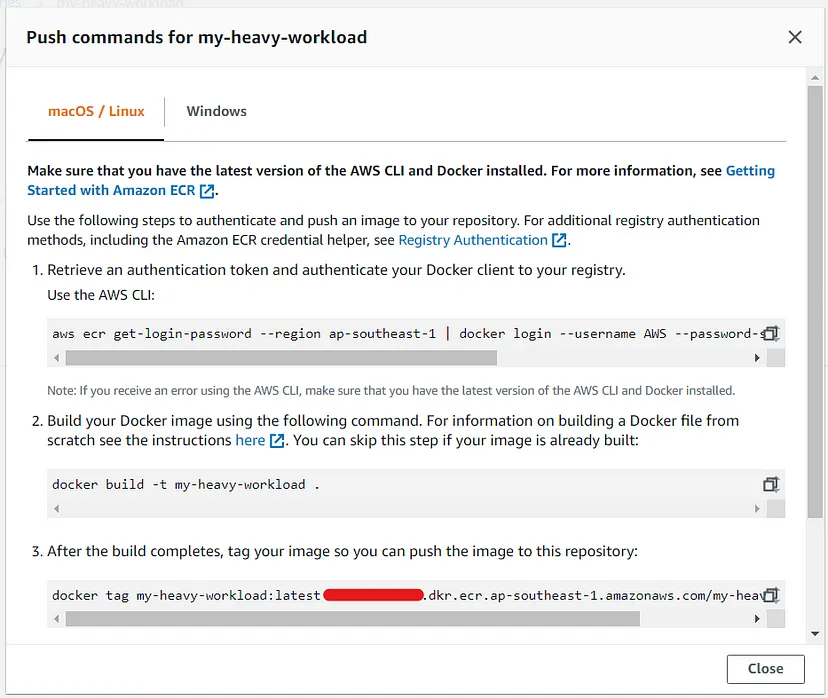

Once the repository is created, you can copy the push commands that ECR provides for it and use them to push a new Docker image.

Step 2: Create a new Docker Image

Now it's time to create a new Docker image. For the sake of simplicity, we will use a simple Hello World image that you can extend to fit your use case.

Here is a sample Dockerfile that uses a Node.js base image provided by AWS.

FROM public.ecr.aws/lambda/nodejs:12
COPY app.js ${LAMBDA_TASK_ROOT}
CMD [ "app.handler" ]

A sample app.js file looks like a typical Lambda Node.js handler:

// Lambda calls this function once per invocation
exports.handler = async (event) => {
    return {statusCode: 200, body: JSON.stringify('Hello from Lambda!')};
};

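If you want to move your Python or R workloads to Lambda using a custom Docker image, the base image is expected to contain Python 3, and R if you need it.

FROM my-base-docker-uri:x.y.z
# A custom base image may not define LAMBDA_TASK_ROOT, so set it explicitly
ENV LAMBDA_TASK_ROOT=/var/task
WORKDIR ${LAMBDA_TASK_ROOT}
COPY app.py ${LAMBDA_TASK_ROOT}/
# Install the AWS Lambda Runtime Interface Client (awslambdaric) for Python 3
RUN pip3 install awslambdaric
# The path to the Python interpreter depends on your base image
ENTRYPOINT [ "/usr/local/bin/python", "-m", "awslambdaric" ]
CMD [ "app.handler" ]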

A sample app.py file looks like a typical Lambda Python handler:

import json

def handler(event, context):
    # To run an R script from the handler, uncomment the two lines below
    # import os
    # os.system("Rscript work.R")
    return {'statusCode': 200, 'body': json.dumps({'result': 'Hello from Lambda!'})}
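Before building the image, it can help to call the handler directly on your machine. The snippet below is a minimal sketch of such a check: the handler is repeated so the snippet runs on its own, and `invoke_locally` and the sample event are hypothetical names used only for illustration. It does not emulate the Lambda runtime; it only confirms that the function returns the expected shape.

```python
import json


def handler(event, context):
    # Same shape as the sample app.py handler, repeated so this runs standalone.
    return {"statusCode": 200, "body": json.dumps({"result": "Hello from Lambda!"})}


def invoke_locally(event):
    # Lambda passes a context object; the sample handler ignores it, so None is enough here.
    response = handler(event, None)
    # The body is a JSON string, so decode it to inspect the payload.
    return response["statusCode"], json.loads(response["body"])


if __name__ == "__main__":
    status, payload = invoke_locally({"key1": "value1"})
    print(status, payload)
```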

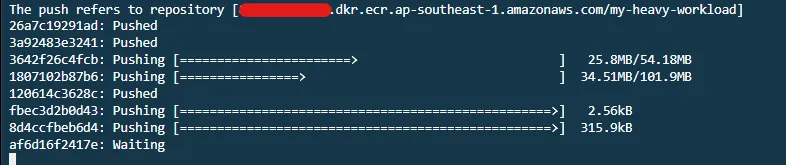

Once your Dockerfile is ready, you need to build the image and push it to Amazon ECR.

First, build the image and tag it as latest:

docker build -t my-heavy-workload .

docker tag my-heavy-workload:latest xxxxxxxxxx.dkr.ecr.ap-southeast-1.amazonaws.com/my-heavy-workload:latest

Finally, push it to Amazon ECR using the push commands that ECR provides, as mentioned in Step 1.
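For reference, the push sequence usually has the shape sketched below. This helper only assembles the command strings and does not run anything. The account ID in the usage example is a made-up placeholder, and `ecr_push_commands` is a hypothetical name; the commands shown in your own ECR console remain the authoritative version.

```python
def ecr_push_commands(account_id, region, repository, tag="latest"):
    """Return the shell commands typically used to push an image to Amazon ECR."""
    registry = f"{account_id}.dkr.ecr.{region}.amazonaws.com"
    image_uri = f"{registry}/{repository}:{tag}"
    return [
        # Authenticate the local Docker client against the ECR registry.
        f"aws ecr get-login-password --region {region} | "
        f"docker login --username AWS --password-stdin {registry}",
        # Build the image from the Dockerfile in the current directory.
        f"docker build -t {repository}:{tag} .",
        # Tag the local image with the full repository URI.
        f"docker tag {repository}:{tag} {image_uri}",
        # Upload the tagged image.
        f"docker push {image_uri}",
    ]


if __name__ == "__main__":
    # "123456789012" is a placeholder account ID, not a real one.
    for command in ecr_push_commands("123456789012", "ap-southeast-1", "my-heavy-workload"):
        print(command)
```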

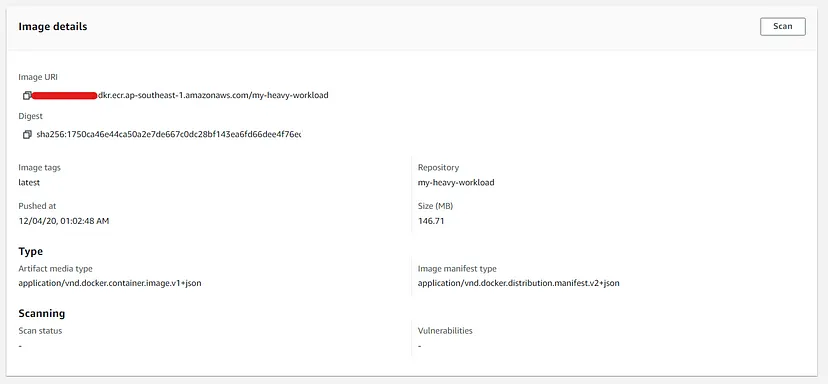

After the image has been pushed successfully, check your ECR repository for the image details. Note the image digest: it is what links a specific image to your Lambda deployment.

Step 3: Create a New Lambda function

You can allocate up to 10 GB of memory to a Lambda function. Because Lambda allocates CPU power in proportion to the configured memory, this gives you access to up to 6 vCPUs in each execution environment, so your multithreaded and multiprocess applications run faster.

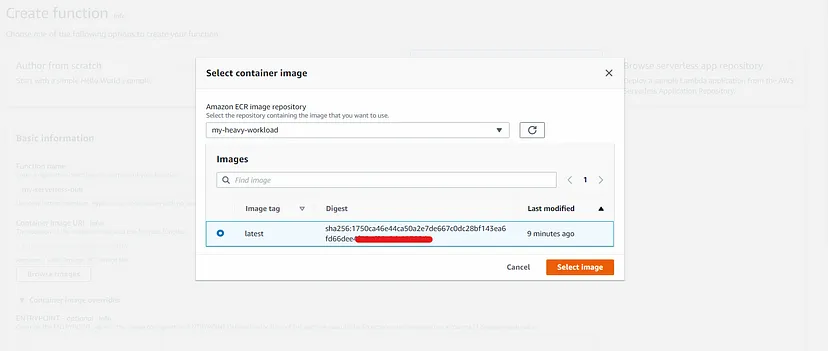

Head over to AWS Lambda and click the “Create function” button. Select Container image as the deployment method, then choose the Docker image to deploy. We will select the latest image and proceed to create the function.
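The same step can also be scripted. The sketch below builds the request for the Lambda `create_function` API with `PackageType` set to `Image`; it assumes you would send it with boto3, which is not part of the original walkthrough. The function name, role ARN, and account ID are hypothetical placeholders, and the memory and timeout defaults are arbitrary.

```python
def build_create_function_request(function_name, image_uri, role_arn,
                                  memory_mb=1024, timeout_s=30):
    """Build keyword arguments for the Lambda create_function API (container image)."""
    # Lambda accepts between 128 MB and 10,240 MB (10 GB) of memory.
    if not 128 <= memory_mb <= 10240:
        raise ValueError("memory_mb must be between 128 and 10240")
    # Lambda functions can run for at most 900 seconds.
    if not 1 <= timeout_s <= 900:
        raise ValueError("timeout_s must be between 1 and 900")
    return {
        "FunctionName": function_name,
        "PackageType": "Image",          # deploy from a container image, not a zip
        "Code": {"ImageUri": image_uri},  # tag or digest reference in ECR
        "Role": role_arn,
        "MemorySize": memory_mb,
        "Timeout": timeout_s,
    }


if __name__ == "__main__":
    request = build_create_function_request(
        "my-heavy-workload",  # hypothetical function name
        "123456789012.dkr.ecr.ap-southeast-1.amazonaws.com/my-heavy-workload:latest",
        "arn:aws:iam::123456789012:role/my-lambda-role",  # hypothetical role
        memory_mb=5120,
    )
    print(request)
    # With boto3 installed and credentials configured, the call would be:
    #   import boto3
    #   boto3.client("lambda").create_function(**request)
```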

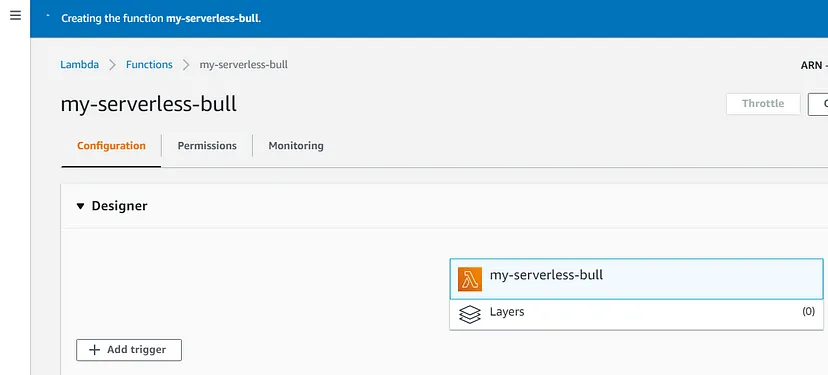

It might take from 10 to 30 seconds to provision your Lambda function.

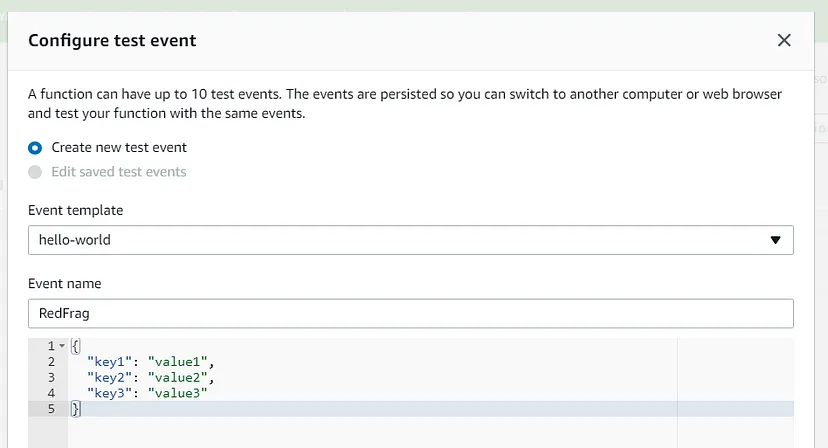

Once provisioning is done, go ahead and create a new test event. We will use it to test our execution environment.

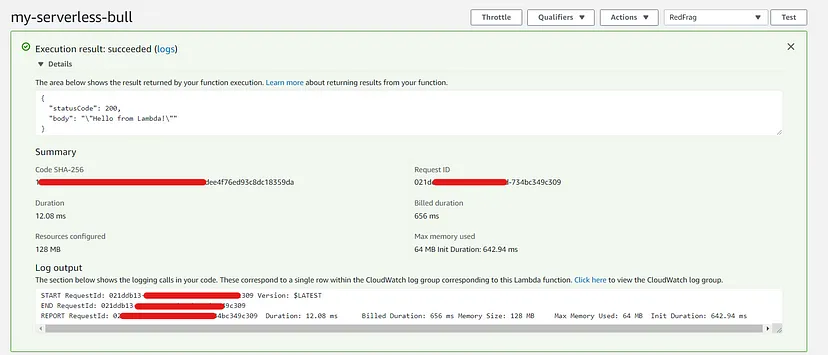

Now you are all set to run your first Docker image on Lambda. Click Test to run your code.

More memory does not always mean a higher bill. The AWS News Blog post on 10 GB Lambda functions reports that, for its sample workload, configuring 5 GB of memory costs the same as 1 GB (about $61 for one million invocations) while the function runs 5x faster. If you need lower latency, you can increase memory up to 10 GB, where the function is 7.6x faster and costs a little more (about $80 for one million invocations).
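The arithmetic behind those figures can be reproduced with a short calculation. This is only a sketch under stated assumptions: the two price constants are the commonly published on-demand rates for x86 functions and may differ by region or over time, and the 3.65-second baseline duration is not given in the source; it is back-calculated so that the 1 GB case lands near $61.

```python
PRICE_PER_GB_SECOND = 0.0000166667   # assumption: published x86 on-demand rate
PRICE_PER_MILLION_REQUESTS = 0.20    # assumption: published request charge
BASELINE_SECONDS_AT_1GB = 3.65       # assumption: back-calculated from the $61 figure


def cost_per_million(memory_gb, speedup):
    """Estimated cost in USD of one million invocations at a given memory size."""
    # A faster function is billed for proportionally less time.
    duration_s = BASELINE_SECONDS_AT_1GB / speedup
    # Lambda bills compute as memory (GB) multiplied by duration (seconds).
    gb_seconds = memory_gb * duration_s
    return 1_000_000 * gb_seconds * PRICE_PER_GB_SECOND + PRICE_PER_MILLION_REQUESTS


if __name__ == "__main__":
    for memory_gb, speedup in [(1, 1.0), (5, 5.0), (10, 7.6)]:
        print(f"{memory_gb} GB: ${cost_per_million(memory_gb, speedup):.2f}")
```

The 1 GB and 5 GB cases come out equal because a 5x speedup exactly cancels the 5x memory, while at 10 GB the 7.6x speedup does not fully offset the 10x memory, which is where the extra cost comes from.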

Further, you can use all the default Lambda triggers to invoke your function. You can put it behind an API server to serve as a microservice, use it to process data sets, and so on. You can attach IAM roles and invocation policies for access control and to apply the principle of least privilege (PoLP), and mount EFS for file storage. For network access, you can attach the function to a VPC. Add X-Ray to monitor performance and usage.

Read More:

- New for AWS Lambda — Functions with Up to 10 GB of Memory and 6 vCPUs | AWS News Blog (amazon.com)

- GitHub — aws/serverless-application-model: AWS Serverless Application Model (SAM) is an open-source framework for building serverless applications

- Creating faster AWS Lambda functions with AVX2 | AWS Compute Blog (amazon.com)

- New — A Shared File System for Your Lambda Functions | AWS News Blog (amazon.com)